Vector Rank Theory

Vector Components

Who: Brand/Individual

What: Person, place, thing

Why: Event/action/causality

When: Timestamps (past/present/future)

Where: Location-based, channel-based, device-based

In the vector space information retrieval (IR) model, a unique vector is defined for every term in every document. Another unique vector is computed for the user's query. Because queries are easily represented in the same vector space, searching translates to computing distances between query and document vectors.
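A minimal sketch of "searching as distance computation", assuming hypothetical document and query vectors over a shared three-term vocabulary and plain Euclidean distance as the measure (smaller means closer). The vectors and function names are illustrative only.

```python
import math

# Hypothetical vectors: one component per term in a shared, fixed vocabulary,
# e.g. ("search", "engine", "vector").
documents = {
    "doc1": [2.0, 1.0, 0.0],
    "doc2": [0.0, 1.0, 3.0],
    "doc3": [1.0, 1.0, 2.0],
}
query = [1.0, 1.0, 0.0]

def rank_by_distance(query, documents):
    """Return document ids ordered from closest to farthest from the query."""
    # math.dist computes the Euclidean distance between two points (Python 3.8+).
    return sorted(documents, key=lambda d: math.dist(query, documents[d]))

print(rank_by_distance(query, documents))
```

Here doc1 is at distance 1, doc3 at distance 2, and doc2 at distance √10, so the ranking is doc1, doc3, doc2.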

Vector Space Modeling

SMART (System for the Mechanical Analysis and Retrieval of Text), developed by Gerald Salton and his colleagues at Cornell University, was one of the first examples of a vector space IR model. In such a model, terms and/or documents are encoded as vectors in k-dimensional space. The choice of k can be based on the number of unique terms, concepts, or perhaps classes associated with the text collection. Each vector component (or dimension) then reflects the importance of the corresponding term, concept, or class in representing the semantics or meaning of the document.
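The paragraph leaves open how a component's "importance" is computed. SMART supported a range of term-weighting schemes; the sketch below assumes just one common choice, raw term frequency times inverse document frequency (tf-idf), applied to a hypothetical count matrix. It is an illustration, not SMART's own code.

```python
import math

def tfidf_weights(counts):
    """Weight a term-by-document count matrix (rows = terms, columns = documents).

    Assumed scheme: w[i][j] = tf[i][j] * log(N / df[i]), where N is the number
    of documents and df[i] is the number of documents containing term i.
    A term that occurs in every document gets weight 0 everywhere.
    """
    n_docs = len(counts[0])
    weighted = []
    for row in counts:
        df = sum(1 for c in row if c > 0)          # document frequency of the term
        idf = math.log(n_docs / df) if df else 0.0  # rarer terms get larger weights
        weighted.append([c * idf for c in row])
    return weighted

# Hypothetical 3-term x 4-document count matrix.
counts = [
    [1, 0, 1, 0],  # term 1: in documents 1 and 3
    [1, 1, 1, 1],  # term 2: in every document
    [0, 0, 0, 2],  # term 3: only in document 4
]
for row in tfidf_weights(counts):
    print([round(w, 3) for w in row])
```

Term 2 appears everywhere and so carries no discriminating weight, while term 3, concentrated in one document, receives the largest weight.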

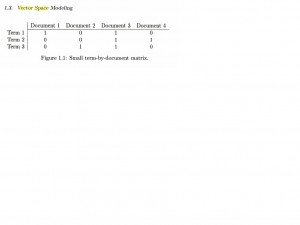

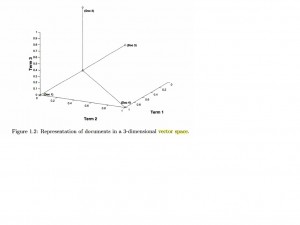

Figure 1.1 demonstrates how a simple vector space model can be represented as a term-by-document matrix. Here, each column defines a document, while each row corresponds to a unique term or keyword in the collection. The value stored in each matrix element (cell) is the frequency with which a term occurs in a document. For example, Term 1 appears once in both Document 1 and Document 3 but not in the other two documents (see Figure 1.1). Figure 1.2 demonstrates how each column of the 3×4 matrix in Figure 1.1 can be represented as a vector in 3-dimensional space. Using a k-dimensional space to represent documents for clustering and query matching purposes can become problematic if k is chosen to be the number of terms (the rows of the matrix in Figure 1.1), since a realistic collection can contain tens of thousands of unique terms.
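A small sketch of building a term-by-document frequency matrix like the one in Figure 1.1, assuming a hypothetical four-document collection and naive lowercase whitespace tokenization. Each column of the result is one document vector, as in Figure 1.2. The function names are illustrative.

```python
from collections import Counter

def term_document_matrix(docs):
    """Build a term-by-document frequency matrix.

    Rows are unique terms (sorted alphabetically), columns are documents in
    input order, and each cell holds how often the term occurs in the document.
    Tokenization is a naive lowercase whitespace split (an assumption).
    """
    counts = [Counter(doc.lower().split()) for doc in docs]
    terms = sorted(set().union(*counts))           # vocabulary of the collection
    matrix = [[c[t] for c in counts] for t in terms]  # Counter returns 0 if absent
    return terms, matrix

def document_vector(matrix, j):
    """Column j of the matrix: document j as a vector with one component per term."""
    return [row[j] for row in matrix]

docs = [  # hypothetical collection
    "vector space model",
    "search engine",
    "vector search",
    "search engine ranking",
]
terms, A = term_document_matrix(docs)
for t, row in zip(terms, A):
    print(f"{t:8} {row}")
print(document_vector(A, 2))
```

Even this toy collection needs a 6-dimensional space, one dimension per unique term, which hints at why choosing k equal to the number of terms scales poorly.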

Once queries are represented as vectors in the same k-dimensional space, documents (and terms) can be compared and ranked according to their similarity to the query. Measures such as the Euclidean distance and the cosine of the angle between document and query vectors provide the similarity values for ranking. Approaches based on conditional probabilities (logistic regression, Bayesian models) for judging document-to-query similarity also fall within the scope of VectorRank.
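A minimal sketch of ranking by cosine similarity, assuming hypothetical vectors over a three-term vocabulary. The "long" document points in the same direction as "short" but is four times longer, which shows how cosine and Euclidean distance can disagree.

```python
import math

def cosine(a, b):
    """Cosine of the angle between a and b; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def rank_by_cosine(query, doc_vectors):
    """Return (doc_id, score) pairs sorted by decreasing cosine similarity."""
    scores = {d: cosine(query, v) for d, v in doc_vectors.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical vectors over the vocabulary ("search", "engine", "vector").
doc_vectors = {
    "short": [1.0, 1.0, 0.0],
    "long":  [4.0, 4.0, 0.0],  # same direction as "short", four times longer
    "other": [0.0, 1.0, 2.0],
}
query = [1.0, 1.0, 0.0]

for doc_id, score in rank_by_cosine(query, doc_vectors):
    print(doc_id, round(score, 3))
```

Cosine scores "short" and "long" equally because it depends only on direction. Euclidean distance would instead place "long" (distance √18 ≈ 4.24) behind "other" (distance √5 ≈ 2.24), penalizing the longer document for its length alone.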

In simplest terms, search engines take the user's query and find all the documents that are related to it. However, this task quickly becomes complicated, especially when the user wants more than just a literal match. One approach, known as latent semantic indexing (LSI), attempts to do more than literal matching. Because it employs a vector space representation of both terms and documents, LSI can find relevant documents that may not share any of the search terms provided by the user. Modeling the underlying term-to-document association patterns is the key to conceptual indexing approaches such as LSI.
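The toy example below, on a hypothetical three-document collection, shows the kind of association pattern such methods exploit. It is not LSI itself, only a look at raw co-occurrence. A literal match for "car" gives no credit to the document about an "automobile engine", yet "car" and "automobile" are linked indirectly through "engine".

```python
# Hypothetical toy collection: rows = terms, columns = documents 1..3.
terms = ["car", "automobile", "engine", "flower"]
A = [
    [1, 0, 0],  # car:        document 1 only
    [0, 1, 0],  # automobile: document 2 only
    [1, 1, 0],  # engine:     documents 1 and 2
    [0, 0, 1],  # flower:     document 3 only
]

def literal_scores(query_terms, terms, A):
    """Count how many query terms each document contains (literal matching)."""
    rows = [terms.index(t) for t in query_terms if t in terms]
    return [sum(A[i][j] > 0 for i in rows) for j in range(len(A[0]))]

def matmul(X, Y):
    """Plain-Python matrix product X @ Y."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

At = [list(col) for col in zip(*A)]
C = matmul(A, At)   # A is 0/1, so C[i][k] = number of documents containing terms i and k
C2 = matmul(C, C)   # C2[i][k] > 0: terms i and k co-occur directly or via another term

print(literal_scores(["car"], terms, A))  # document 2 gets no literal credit
print(C[0][1], C2[0][1])                 # car/automobile: no direct link, one indirect link
```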

Efficient indexing via vector space modeling requires special encodings for the terms and documents in a text collection. LSI-based indexing requires encoding the term-by-document matrix in a lower-dimensional vector space (one whose dimension is much smaller than the number of terms or documents) by means of either continuous or discrete matrix decompositions. The singular value decomposition (SVD) and the semidiscrete decomposition (SDD) are two examples of matrix decompositions from numerical linear algebra that can be used in vector space IR models such as LSI. The matrix factors produced by these decompositions provide automatic ways of encoding, or representing, terms and documents as vectors in any dimension. Clustering of similar or related terms and documents is then realized through probes into the derived vector space, i.e., queries.
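A minimal, dependency-free sketch of SVD-based encoding, assuming a tiny hypothetical collection. Power iteration with deflation approximates the k largest singular triplets, and the rows of U_k Σ_k and V_k Σ_k serve as k-dimensional term and document vectors. Scaling by Σ_k is one convention among several (an assumption), the SDD is not shown, and the helper names are hypothetical.

```python
import math

def _matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def _transpose(M):
    return [list(col) for col in zip(*M)]

def _normalize(x):
    n = math.sqrt(sum(v * v for v in x))
    return ([v / n for v in x], n) if n > 0 else (x, 0.0)

def truncated_svd(A, k, iters=500):
    """Approximate the k largest singular triplets (sigma, u, v) of A.

    Power iteration on R^T R with deflation. Adequate for tiny examples only;
    convergence slows when singular values are close together, and the
    all-ones start vector is assumed not to be orthogonal to the target.
    """
    R = [[float(x) for x in row] for row in A]  # residual, deflated after each triplet
    triplets = []
    for _ in range(k):
        Rt = _transpose(R)
        v, _ = _normalize([1.0] * len(R[0]))
        for _ in range(iters):
            v, n = _normalize(_matvec(Rt, _matvec(R, v)))
            if n == 0.0:
                break
        u, sigma = _normalize(_matvec(R, v))
        if sigma == 0.0:
            break  # residual is zero: A has rank < k
        triplets.append((sigma, u, v))
        # Deflate: remove the rank-1 component sigma * u * v^T from the residual.
        R = [[R[i][j] - sigma * u[i] * v[j] for j in range(len(v))]
             for i in range(len(u))]
    return triplets

def lsi_encode(A, k):
    """Rows of U_k*Sigma_k encode terms; rows of V_k*Sigma_k encode documents."""
    triplets = truncated_svd(A, k)
    term_vecs = [[s * u[i] for s, u, _ in triplets] for i in range(len(A))]
    doc_vecs = [[s * v[j] for s, _, v in triplets] for j in range(len(A[0]))]
    return term_vecs, doc_vecs

# Hypothetical terms car, automobile, engine, flower x documents 1..3.
A = [[1, 0, 0], [0, 1, 0], [1, 1, 0], [0, 0, 1]]
term_vecs, doc_vecs = lsi_encode(A, 1)
print([round(d[0], 3) for d in doc_vecs])
```

With k = 1, documents 1 and 2 receive identical coordinates even though their only shared term is "engine", while document 3 sits at the origin. In practice a library routine such as `numpy.linalg.svd`, or a sparse solver for large matrices, would replace the hand-rolled power iteration.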

Query matching within a vector space IR model can be very different from conventional item matching. The latter conjures up an image of a user typing in a few terms and the search engine matching those terms to the ones indexed from the documents in the collection. In vector space models such as LSI, by contrast, the query can be interpreted as another (new) document. Upon submitting the query, the search engine retrieves the cluster of documents (and terms) whose word usage patterns reflect that of the query.
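Treating the query as a new document can be written out for an SVD-based model. Assuming the term-by-document matrix $A$ is approximated by its rank-$k$ SVD, a query's term vector $q$ is commonly "folded in" to the same $k$-dimensional coordinates as the rows $\hat{d}_j$ of $V_k$ and then compared by cosine. Other scalings (for example, weighting both sides by $\Sigma_k$) are also used, so treat this as one common convention rather than the only one:

$$
A \approx A_k = U_k \Sigma_k V_k^{\top}, \qquad
\hat{q} = q^{\top} U_k \Sigma_k^{-1}, \qquad
\operatorname{sim}(q, d_j) = \frac{\hat{q} \cdot \hat{d}_j}{\lVert \hat{q} \rVert \, \lVert \hat{d}_j \rVert}
$$

The same map sends each original document column $a_j$ of $A$ to $a_j^{\top} U_k \Sigma_k^{-1} = \hat{d}_j$, which is why the query can be handled exactly as if it were one more document.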
